Whether you love data or not, A/B testing is important for every ecommerce brand.

It tells you a lot about your customers, helps improve their shopping experience, and impacts your conversion rate. Truthfully, A/B testing is one of the best ways to determine what impact your site changes will have. And as a brand founder or marketer, you don’t want to waste time making changes that hurt your revenue, right?

You can also redesign your entire product page and see conversion rates improve, but if you don’t know which elements drove that improvement, the result won’t help much when you make more updates down the road.

Testing allows you to see a measurable impact of specific site changes and lends itself to a more data-driven approach to conversion rate optimization.

Today, we’re going to dive into A/B testing best practices and walk through Live Bearded’s drawer cart test to show you how impactful these changes can be for your online store.

What is A/B testing?

A/B testing is a way to experiment with two or more versions of an element on your website. These versions are shown to different groups of website visitors at the same time to see which one performs better with your target audience. You can test any web page, such as your homepage, product pages, or landing pages. Common items to test are copy, images, CTA buttons, button color, social proof, or other site elements.
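To make "different groups of visitors at the same time" concrete, here is a minimal sketch of one way visitors could be bucketed. The function name, visitor IDs, and experiment name are hypothetical, and testing tools handle this step for you and may do it differently.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str, variants: list[str]) -> str:
    """Deterministically map a visitor to one variant with roughly equal odds."""
    # Hash the experiment name together with the visitor ID so the same
    # visitor always sees the same version within one experiment.
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Turn the hash into a bucket index: 0 .. len(variants) - 1.
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


# Hypothetical experiment with a control and one variation.
variants = ["control", "variation"]
print(assign_variant("visitor-123", "cart-test", variants))
```

Because the assignment depends only on the IDs, a returning visitor keeps seeing the same version, which keeps the two groups cleanly separated.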

How do you decide which version won? It depends on what you’re optimizing for. For example, if your goal is a higher click-through rate on your product pages, then the version with the higher click-through rate wins.

Testing usually runs for two weeks or more. At the end of the test, you’ll update your website with the winning version. The goal is to collect data that helps you decide which version will have the biggest impact on site performance.
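A higher rate on its own can still be noise, so it helps to check whether the gap is statistically significant. Here is a minimal sketch using a two-proportion z-test, one common textbook approach. The counts are made up for illustration, and your testing tool may use a different statistical model.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z statistic, two-sided p-value) comparing rate B against rate A."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the assumption that both versions perform the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: clicks (or orders) and visitors for each version.
z, p = two_proportion_z_test(conv_a=200, n_a=10_000, conv_b=250, n_b=10_000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value (0.05 is a common threshold) suggests the difference is unlikely to be chance alone.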

Businesses will also test marketing campaigns to tweak their approach to email subject lines and call-to-action buttons, or even the copy of digital marketing campaigns like Facebook ads.

A/B testing vs split testing vs multivariate testing

A/B testing and split testing are often used interchangeably. The difference between the two is that split testing sends the same amount of traffic to each test variation.

On the other hand, multivariate testing is used for testing multiple variables at once. These tests are used to determine which combination of variations performs the best out of all of the possible combinations.
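To see how quickly "all of the possible combinations" adds up, here is a short sketch that lists them. The page elements and their variations are hypothetical.

```python
from itertools import product

# Hypothetical page elements and their variations, for illustration only.
elements = {
    "headline": ["current", "benefit-led"],
    "cta_color": ["green", "orange"],
    "hero_image": ["lifestyle", "product-only"],
}

# Every combination of one variation per element.
combinations = [dict(zip(elements, combo)) for combo in product(*elements.values())]

print(len(combinations))  # 2 * 2 * 2 = 8
for combo in combinations:
    print(combo)
```

Each extra element multiplies the number of combinations, and each combination needs its own share of traffic, so multivariate tests generally need more visitors than a simple A/B test.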

The goal of these tests is similar to a standard A/B test. Maybe you’re trying to see where users drop off from your site to decrease your bounce rate.

Why you should do regular A/B testing

A/B testing takes the guesswork out of making changes to your website. By collecting this data, you can feel more confident about the decisions you make, especially when launching new features or products. Running tests also helps you make data-driven decisions about your marketing strategy, pricing strategy, customer journey, and website usability.

But it’s more than just adding the right copy to get a user to take a certain action—these tests can show you why customers like your products, what messaging resonates with them the most, the pages with the most opportunity, and how you can even build trust with them.

You can use this information both on and off your website. For example, if you learn that customers engaged more with a specific kind of messaging on your site, then try using it in your emails, paid ads, or organic social media posts.

This was something Anthony Mink, the founder of Live Bearded, learned after testing a few variations of his cart page. The version that won had messaging in the cart to help build trust with customers, and Anthony learned this kind of messaging resonated best with his customers.

“It's important to build trust and restate your brand promise across every key touchpoint in the funnel because everyone is bombarded with [more] marketing messages and ‘buy-my-shit’ ads than ever before. As a brand, we work hard to understand the exact reasons customers like our products, buy from us, and what is most important to them in the buying process.”

When to A/B test as a Shopify brand

One burning question marketers have: Should you A/B test everything? The answer is no.

It’s best to A/B test certain site changes to confirm they have a positive impact on your metrics. However, the general rule of thumb is that your store should have a minimum of 1,000 transactions per month for the test to yield usable results.

Anything less than that and you likely won’t be able to reach a significant test result or see an ROI on the investment to set up and monitor the test.
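Why does volume matter so much? Here is a rough sketch that estimates how many visitors each version needs before a given lift is detectable. It uses a standard textbook approximation for comparing two rates. The baseline rate, the lift, and the 5% significance and 80% power defaults are assumptions for illustration, not figures from our tests.

```python
from math import ceil
from statistics import NormalDist


def visitors_per_variant(baseline_rate: float, relative_lift: float,
                         alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate visitors needed per version to detect the given relative lift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_power = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_power) ** 2 * variance / (p2 - p1) ** 2)


# Hypothetical store: 2% conversion rate, hoping to detect a 10% relative lift.
print(visitors_per_variant(baseline_rate=0.02, relative_lift=0.10))
```

The smaller the lift you want to detect, the more visitors you need, which is why low-volume stores struggle to reach a significant result.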

How long should you run an A/B test?

To get a representative sample size, the minimum testing time is 1-2 weeks. Some tests might run up to 3-4 weeks if you have a longer buying cycle or are testing certain shopping behavior.

The truth is it depends on how much time you think you need to feel confident that your results will drive a positive difference.

If you’re testing smaller changes (like updating a supporting landing page or making a minor copy edit), you may want to run your test longer than 1-2 weeks. These changes get less traffic or have less of an impact on the decision to buy, so it can take more time to reach statistical significance.

On the other hand, more impactful changes—like testing a change to your navigation menu or cart—don’t need as long because these changes get more eyeballs every day, so you can reach significance faster.
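The link between traffic and test length can be sketched with simple arithmetic. The visitor numbers below are hypothetical, and rounding up to whole weeks is an assumption made here so that every day of the week is covered.

```python
from math import ceil


def estimated_test_weeks(visitors_needed_per_variant: int, num_variants: int,
                         daily_visitors_to_page: int) -> int:
    """Estimate how many whole weeks a test needs to collect enough visitors."""
    total_needed = visitors_needed_per_variant * num_variants
    days = ceil(total_needed / daily_visitors_to_page)
    # Assumption: round up to whole weeks, with a minimum of one week.
    return max(1, ceil(days / 7))


# Same hypothetical sample size, two pages with different traffic.
print(estimated_test_weeks(20_000, 2, 5_000))  # high-traffic page: 2
print(estimated_test_weeks(20_000, 2, 1_000))  # low-traffic page: 6
```

The same test takes three times as long on the quieter page, which matches the advice above to give low-traffic changes more time.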

3 A/B testing best practices

The trick with A/B testing is that it’s never just one-and-done. There will always be improvements to make across your site—even on items you already A/B tested.

1. Run a variety of test sizes

Your A/B testing roadmap should include a mix of simple tests that are easy to set up and run (though they may have less of an impact on conversion rate or other KPIs), and bigger tests that require more resources to set up and run, but have a larger impact on your KPIs.

This approach allows you to run a lot of tests and iteratively improve the user experience while being efficient with resources.

2. Remember that test results are never permanent

You can A/B test a drawer cart and see it lose to a traditional cart page, then run the same test 12 months later and see different results.

As your traffic mix, website content, and user preferences change, you should continuously re-test certain site elements to see if your results are different than they were when you last ran a test.

3. Watch your timing

Be aware of timing. For example, don't launch an A/B test the same week you're launching a major sale. Those results will likely be skewed and may not be an accurate reflection of typical user behavior.

A/B testing tools we recommend

We’ve run hundreds, if not thousands, of A/B tests for clients here at Fuel Made, so here are the tools we recommend:

- Intelligems: Built specifically for Shopify, minimal front-end friction, a great all-in-one solution.

- Convert.com: Seamless integration with GA4 and AdWords.

A/B testing example: Live Bearded

You have the A/B testing tips. Now let’s put it all together by going through an example with Live Bearded.

We’ve been working with Live Bearded for a while. We first kicked off our partnership by redesigning their website, and since then we’ve continued to make ongoing improvements through CRO and testing.

One test we recently ran with them compared three different versions of their cart. We were planning to change their /cart page to a slide-out drawer cart since this is quickly becoming a best practice on ecommerce sites, but we wanted to make sure the change wouldn’t hurt their conversions.

In the test, we ran two versions of the slide-out cart against the existing /cart page. So, really, think of this test as an A/B/C test.

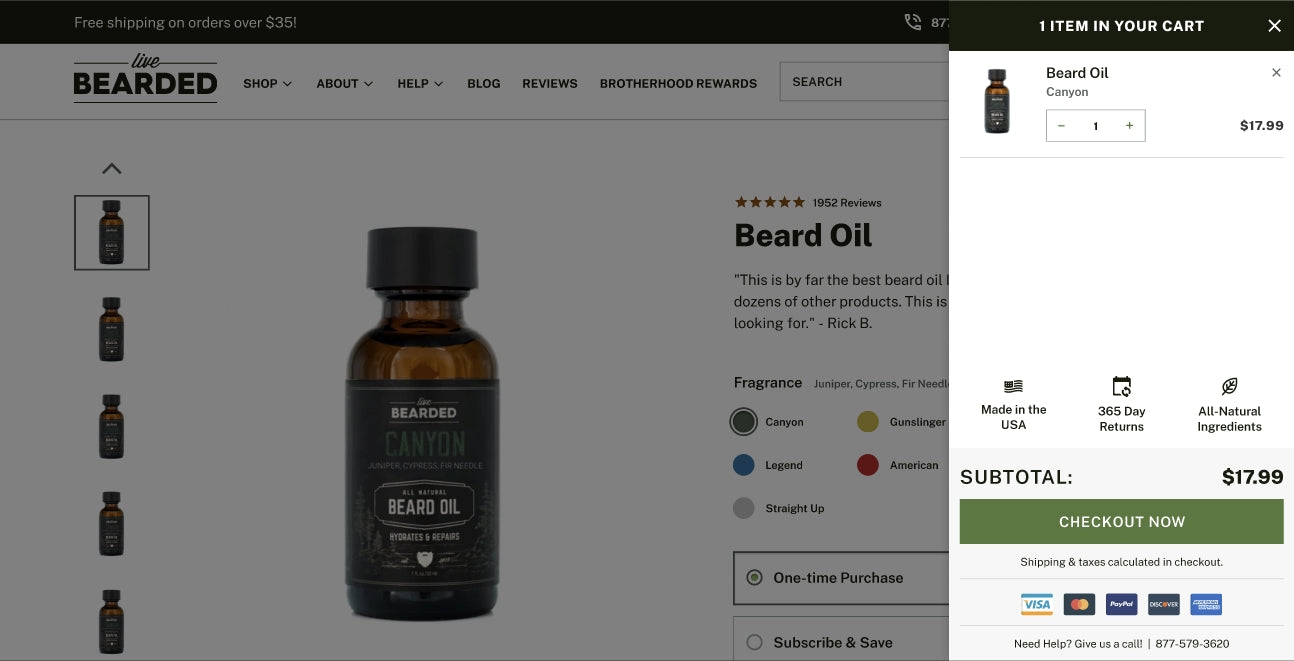

The first version of the slide-out cart included additional iconography and information to help build trust with the customers. This is what the first version looked like:

The second version was simpler. For all three versions, we reviewed conversion rate as a primary metric and revenue per session as a secondary metric. Here’s what the second version looked like:

“This test is a beautiful reminder of why we must always test and retest critical steps in our funnel. We tested a slide-out cart in 2020 and it was a huge loser for us, so I was a bit hesitant to test it again. But buyers' behaviors change over time, especially as the industry as a whole changes. As a brand, we need to always be questioning assumptions and iterating at the important steps of the funnel.”

- Anthony Mink, CEO

Live Bearded’s A/B test results

At the end of the test, it was clear that the slide-out cart with iconography and trust-building information was the winner. It had a modeled improvement over the baseline of up to 18%.
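If you’re wondering what an "improvement over the baseline" means in practice, it’s usually expressed as relative lift. Here is a minimal sketch with made-up rates. These are not Live Bearded’s numbers, and the sketch doesn’t reproduce how the 18% figure was modeled.

```python
def relative_lift(baseline_rate: float, variant_rate: float) -> float:
    """Relative improvement of the variant over the baseline."""
    return (variant_rate - baseline_rate) / baseline_rate


# Hypothetical rates: baseline converts at 3.0%, variant at 3.3%.
lift = relative_lift(baseline_rate=0.030, variant_rate=0.033)
print(f"{lift:.1%}")  # 10.0%
```

Note that a 10% relative lift here is only a 0.3 percentage point change in the conversion rate itself.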

When we reviewed Live Bearded’s checkout completion rate, we also saw that this slide-out cart had an improvement over the baseline of up to 40%!

Anthony said he found himself surprised by these results: “We have run several other A/B tests against our cart page and we've never been able to beat the control. This time, seeing the drawer cart win was a nice surprise as I do believe it streamlines the checkout process and makes it easier for the user to navigate the site, add additional products, and check out when ready.”

As you can see, even small changes to your website can have a big impact.

Remember, CRO is a never-ending game

Testing and optimizing your website is important to continue finding conversion opportunities, but remember, running a test once doesn’t mean you’re done forever.

“We could have easily passed on this test because all of our drawer cart tests ‘failed’ in the past. However, by looking for constant improvement to our shopping experience, we came back to the cart to search for ways to improve the customer experience, and I'm glad we did. It's a win-win as it makes the customer's experience better and increases conversions for our brand,” explained Anthony.

Testing is an ongoing effort, but it’s certainly exciting if you’re a bit of a data nerd. The information you uncover about your customers will continue to bring value to both you and your shoppers.

It can sometimes be tedious, but it’s definitely worth it.